In pharmaceutical regulatory and medical writing circles, few topics spark more curiosity—or concern—than artificial intelligence (AI).

As the life sciences industry faces increasing pressure to modernize, accelerate submissions, and ensure compliance across global markets, AI has emerged as a powerful enabler—particularly when integrated into structured content authoring (SCA).

When applied properly, AI can support organizations in reusing content more effectively, improving document consistency, and reducing overall submission cycle times. Yet, despite growing adoption and measurable impact, misconceptions about AI persist and often hinder its broader use in regulated settings.

This article unpacks five of the most common myths and clarifies the role AI actually plays in regulatory documentation. Spoiler: it’s not writing your Summary of Product Characteristics (SmPC) for you.

Myth #1: “AI Will Replace Medical Writers and Regulatory Authors”

This is perhaps the most pervasive—and unfounded—fear surrounding AI adoption in regulatory writing. AI in SCA environments functions as an assistant—not a replacement.

In practice, AI assists by:

- Identifying content modules suitable for reuse across submissions

- Recommending standardized phrasing from approved language libraries

- Suggesting metadata tags based on content type or therapeutic area

- Flagging inconsistencies between core data sheets and regional variations

These capabilities free writers from redundant tasks that sap time and introduce risk. But writers remain squarely in control: curating, adapting, and validating every piece of regulatory content. AI does not draft entire sections from scratch without oversight, nor does it bypass existing approval workflows.
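To make the first capability concrete, here is a minimal sketch of how a tool might surface reuse candidates for a writer to review. It assumes a plain text-similarity score from Python's standard `difflib`; real platforms may use quite different techniques. The module IDs, sample sentences, and the 0.9 threshold are all hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical content modules keyed by an invented module ID. In practice
# these would come from an approved, version-controlled content library.
MODULES = {
    "smpc-eu-4.2": "The recommended dose is 10 mg once daily, taken with food.",
    "pi-us-2.1": "The recommended dose is 10 mg once daily taken with food.",
    "smpc-eu-4.8": "The most common adverse reactions were headache and nausea.",
}


def reuse_candidates(modules, threshold=0.9):
    """Return (id_a, id_b, score) for module pairs at or above the threshold."""
    pairs = []
    for (id_a, text_a), (id_b, text_b) in combinations(sorted(modules.items()), 2):
        score = SequenceMatcher(None, text_a.lower(), text_b.lower()).ratio()
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 2)))
    return pairs


candidates = reuse_candidates(MODULES)
for id_a, id_b, score in candidates:
    # The tool only proposes; a writer decides whether to consolidate.
    print(f"Review for reuse: {id_a} <-> {id_b} (similarity {score})")
```

The output is a review list, not an action: nothing is merged or rewritten until an author decides.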

Just as spellcheck didn’t eliminate editors, AI doesn’t eliminate authors—it simply augments their capabilities.

Myth #2: “AI Introduces Too Much Risk in a Regulated Environment”

In highly regulated industries like pharma, anything that touches submission content raises valid concerns about compliance, traceability, and control.

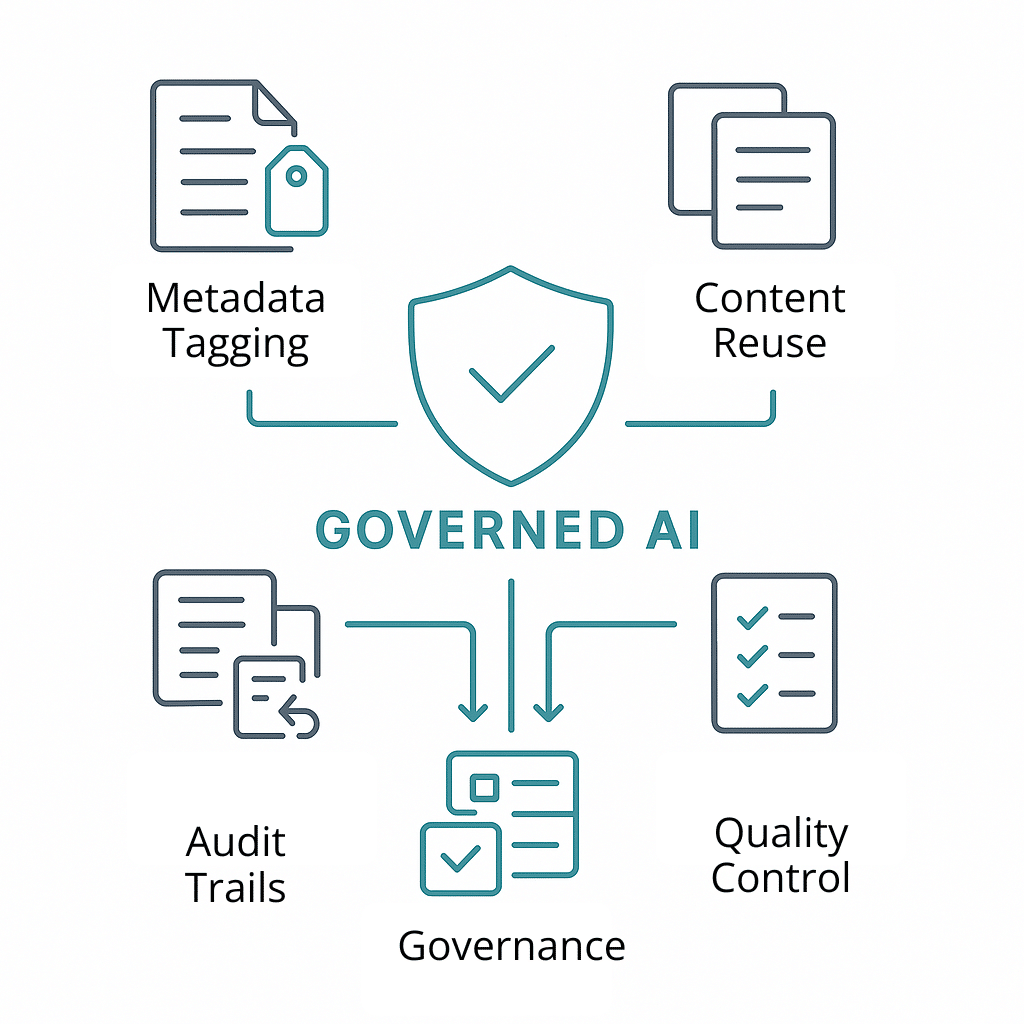

However, modern structured content systems are designed with these exact concerns in mind. When properly implemented, AI can actually reduce risk rather than introduce it.

Here’s how leading platforms address regulatory needs:

- Validated and secure environments: AI is deployed within GxP-compliant platforms with full validation protocols.

- Controlled content sources: AI operates only on approved, version-controlled content modules, not uncontrolled or free-form inputs.

- Audit trails and transparency: Every AI suggestion, whether a metadata tag, phrase recommendation, or content flag, is logged, and the user action taken on it is traceable.

- User control at every step: AI provides suggestions, but users must accept, reject, or edit them. Nothing is applied automatically.

In many cases, AI actually strengthens regulatory rigor. For example, it can detect inconsistent terminology across submissions or flag outdated phrasing that no longer aligns with core regulatory data. These proactive checks catch potential issues early—before they become submission delays.
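The audit-trail and user-control points above can be illustrated with a small sketch. It is not any vendor's implementation: the `Suggestion` and `AuditLog` classes, the field names, and the user IDs are assumptions made for illustration. The idea it shows is that a suggestion stays pending until a named user accepts, rejects, or edits it, and every step is recorded.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

VALID_DECISIONS = {"accepted", "rejected", "edited"}


@dataclass
class Suggestion:
    suggestion_id: str
    module_id: str
    kind: str            # e.g. "metadata_tag", "phrase", "content_flag"
    proposed: str
    status: str = "pending"


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, suggestion, action, user):
        """Append one timestamped entry describing who did what."""
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "suggestion_id": suggestion.suggestion_id,
            "module_id": suggestion.module_id,
            "action": action,
            "user": user,
        })


def propose(log, suggestion):
    """Log a new AI suggestion; it remains pending until a user decides."""
    log.record(suggestion, "proposed", user="ai-assistant")
    return suggestion


def decide(log, suggestion, decision, user, edited_text=None):
    """Apply a human decision and log it. Nothing changes without this call."""
    if decision not in VALID_DECISIONS:
        raise ValueError(f"Unknown decision: {decision}")
    if decision == "edited":
        if edited_text is None:
            raise ValueError("An edited decision needs the edited text")
        suggestion.proposed = edited_text
    suggestion.status = decision
    log.record(suggestion, decision, user)
    return suggestion


log = AuditLog()
tag = propose(log, Suggestion("s-001", "smpc-eu-4.2", "metadata_tag", "posology"))
decide(log, tag, "accepted", user="writer.jane")
```

After these calls the log holds two entries, one for the proposal and one for the writer's acceptance, which is the kind of trail a reviewer or auditor could follow.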

Myth #3: “AI-Supported Content Can’t Handle Scientific or Regulatory Nuance”

It’s true that regulatory writing requires deep domain expertise. Every word carries weight, particularly in documents like Summaries of Product Characteristics (SmPCs), Patient Information Leaflets (PILs), and Clinical Overviews.

But AI is not acting alone—it’s working under the close supervision of subject matter experts. Rather than authoring content autonomously, AI acts as a pattern recognizer and suggestion engine.

For example:

- AI may identify that a specific indication has been worded three different ways across global submissions.

- It may surface the most frequently used, approved version.

- It may flag that a translated version is misaligned with the core document.

At that point, the human author reviews the AI recommendation and determines whether to reuse it, adapt it, or ignore it altogether.
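As a rough sketch of that pattern-recognition step, the snippet below counts wording variants for one indication and surfaces the most frequent one. The regions and sentences are invented sample data, every entry is assumed to be already approved, and the normalization is deliberately simple (case, whitespace, and trailing period only).

```python
from collections import Counter

# Hypothetical sample data: (region, approved indication wording).
SUBMISSIONS = [
    ("EU", "Treatment of moderate to severe plaque psoriasis in adults."),
    ("US", "Treatment of moderate to severe plaque psoriasis in adults."),
    ("JP", "Treatment of adults with moderate-to-severe plaque psoriasis."),
    ("CA", "For the treatment of moderate to severe plaque psoriasis in adult patients."),
]


def normalize(wording):
    """Ignore case, extra whitespace, and a trailing period when comparing."""
    return " ".join(wording.lower().split()).rstrip(".")


def wording_report(submissions):
    """Summarize how many variants exist and which regions deviate."""
    counts = Counter()
    first_seen = {}   # normalized key -> original wording, for display
    regions = {}      # normalized key -> regions using that wording
    for region, wording in submissions:
        key = normalize(wording)
        counts[key] += 1
        first_seen.setdefault(key, wording)
        regions.setdefault(key, []).append(region)
    top_key, _ = counts.most_common(1)[0]
    outliers = sorted(
        region
        for key, region_list in regions.items()
        if key != top_key
        for region in region_list
    )
    return {
        "variant_count": len(counts),
        "most_frequent": first_seen[top_key],
        "outlier_regions": outliers,
    }


report = wording_report(SUBMISSIONS)
print(report)
```

The report only points the author at the divergence. Whether the JP or CA wording differs for a legitimate regional reason is still a human call.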

When paired with strong structured content practices—such as consistent tagging, modularization, and governance—AI actually enhances nuance. It provides better visibility into where inconsistencies exist, enabling authors to harmonize content while still accounting for region-specific requirements or scientific updates.

Myth #4: “Implementing AI Requires a Massive Tech Overhaul”

The idea that AI adoption requires a full digital transformation is a common misconception, and one that can keep organizations from making any progress at all.

The truth is, you don’t need to rip and replace your current systems. Most structured content platforms and AI tools are designed to be modular and interoperable. They can integrate with your existing regulatory information management (RIM) systems, document management systems (DMS), and publishing platforms.

In fact, the most successful implementations start small:

- Select a single high-volume or high-complexity document type (e.g., EU SmPCs or US Prescribing Information)

- Break that document into modular components

- Apply metadata manually to seed your structured content library

- Layer AI on top to assist with tagging, consistency checking, and reuse recommendations

- Expand your use cases over time as your team builds confidence and content maturity

This incremental approach lowers the barrier to entry and allows teams to learn, adapt, and demonstrate early wins before scaling enterprise-wide.
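The first few steps above (pick one document type, break it into modules, seed metadata by hand) can be sketched in a few lines. This assumes numbered section headings in the style of an EU SmPC and uses placeholder body text. The `ContentModule` class and the metadata keys are hypothetical, not a standard schema.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ContentModule:
    module_id: str
    heading: str
    body: str
    metadata: dict = field(default_factory=dict)


# Matches lines such as "4.1 Therapeutic indications". This is a naive rule:
# a body line that starts with a number would also match, so a real splitter
# would need something more robust.
HEADING = re.compile(r"^(\d+(?:\.\d+)*)\s+(.+)$")


def split_into_modules(doc_type, text):
    """Split a document into one module per numbered heading."""
    modules = []
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        match = HEADING.match(line)
        if match:
            number, title = match.groups()
            current = ContentModule(f"{doc_type}-{number}", title, "")
            modules.append(current)
        elif current is not None and line:
            current.body = f"{current.body} {line}".strip()
    return modules


SAMPLE = """\
4.1 Therapeutic indications
Placeholder indication text.
4.2 Posology and method of administration
Placeholder dosing text.
"""

library = split_into_modules("eu-smpc", SAMPLE)

# Metadata is applied manually at first to seed the library.
library[0].metadata.update({"region": "EU", "section_type": "indication"})
library[1].metadata.update({"region": "EU", "section_type": "posology"})
```

Once a library like this exists for one document type, AI-assisted tagging and consistency checks have governed, labeled content to work from.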

Myth #5: “AI Reduces Quality and Editorial Control”

Regulatory authors are justifiably protective of editorial quality. Submission documents must not only be accurate and compliant—they must reflect clarity, consistency, and professional polish.

AI, when applied through structured content, actually improves editorial quality rather than diminishing it.

Here’s why:

- Each content module is governed, version-controlled, and metadata-tagged

- AI suggests but never enforces changes

- Writers retain full control over selection, editing, and final approval

- Reuse of previously approved content reduces human error and re-review cycles

- Formatting, phrasing, and consistency checks are automated, freeing up time for higher-order editorial tasks

The result is fewer manual mistakes, tighter cross-document alignment, and more time for regulatory writers to focus on strategy, clarity, and compliance.

Editorial control isn’t lost—it’s enhanced.

The Real Role of AI in Pharma Documentation

Used within a structured content framework, AI can reduce submission cycle time, improve consistency, strengthen compliance, and support smarter reuse. It handles the heavy lifting of routine checks and formatting so that your experts can focus on high-value content development.

Crucially, AI doesn’t eliminate the role of the medical writer or regulatory author—it elevates it.

By understanding what AI in structured content actually does—and what it doesn’t—we can stop fearing the technology and start using it to advance our documentation practices, accelerate global submissions, and improve patient access to therapies.